The SEALS project is dedicated to the evaluation of semantic web technologies. To that end, it is creating a platform to ease this evaluation, organising evaluation campaigns, and building a community of tool providers and tool users around this evaluation activity.

OAEI and SEALS are closely coordinated in the area of ontology matching, although the SEALS platform covers other areas as well. We plan to progressively integrate the SEALS platform into OAEI evaluation. Starting in 2010, three tracks (benchmark, anatomy, conference) will take advantage of the first version of the SEALS platform.

The SEALS platform will be delivered to participants in two steps: first a web service based evaluation, then a deployment based evaluation.

In its first, simple version, the SEALS platform will allow matchers to be evaluated as web services. Participants will thus have to implement a very simple web service and provide its URL to the platform in order to get results.

This first, primitive version of the platform does only this. It does not keep track of what has been done, does not provide extra support, etc. It allows participants to make sure that they implement the correct interface and to test their system. This will evolve with time, and further services will be provided as soon as they are available.

The instructions for implementing your matcher as a web service are provided in this tutorial. Following them will allow you to test whether you have correctly implemented the interface, and to use a first service of the SEALS platform to test your system. We strongly advise implementing the second part of the tutorial (the MyAlignmentWS part) so that your tool will be ready for the deployment based evaluation.

The evaluation service is available at http://seals.inrialpes.fr/platform/. We update its functionality from time to time; in case of problems, do not hesitate to contact us.

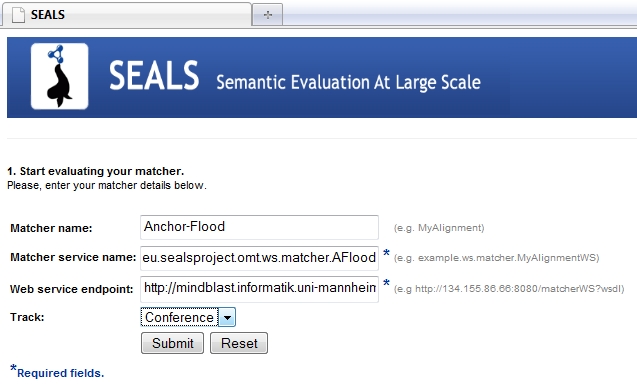

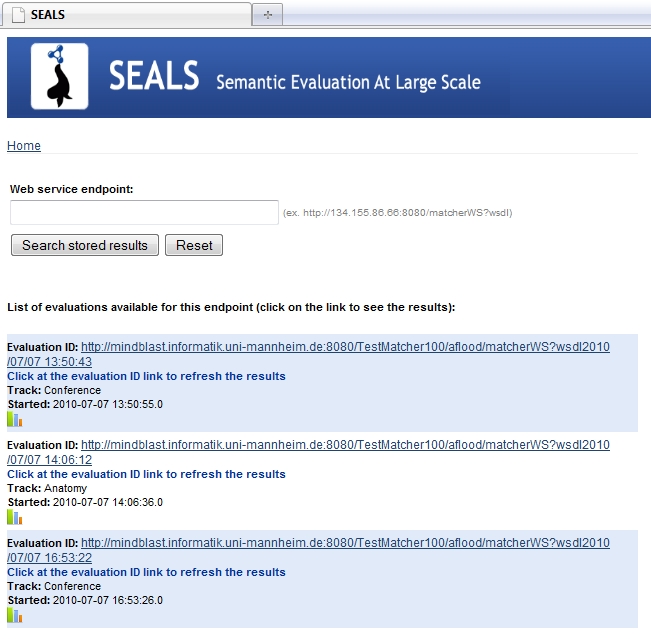

Running an evaluation with the platform consists of providing the name of the system and the endpoint (URL) of the web service, and selecting the test set. Clicking on Submit launches the evaluation.

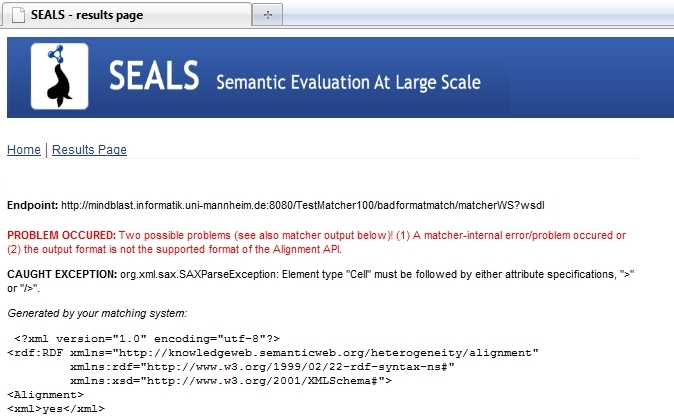

Before an evaluation starts, some validation is performed to verify that the namespace and endpoint are correct and that the tool delivers alignments in the correct Alignment format.

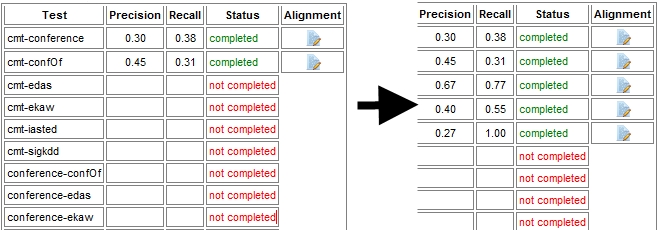
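The platform's own validation steps are not detailed here, but a rough local pre-check before submitting can be sketched as follows. This is only an illustration under stated assumptions: the function names `endpoint_looks_valid` and `looks_like_alignment` are hypothetical, and the namespace URIs are assumed from the Alignment format (an RDF/XML document whose `rdf:RDF` root contains an `Alignment` element); check them against the Alignment API documentation.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
# Assumption: namespace URIs used by the Alignment format (with and without '#').
ALIGN_NS = (
    "http://knowledgeweb.semanticweb.org/heterogeneity/alignment",
    "http://knowledgeweb.semanticweb.org/heterogeneity/alignment#",
)


def endpoint_looks_valid(url: str) -> bool:
    """Syntactic check only: the endpoint must be an http(s) URL with a host."""
    parts = urlparse(url)
    return parts.scheme in ("http", "https") and bool(parts.netloc)


def looks_like_alignment(xml_text: str) -> bool:
    """Check that the text is well-formed XML with an rdf:RDF root
    that directly contains an Alignment element."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError:
        return False
    if root.tag != "{%s}RDF" % RDF_NS:
        return False
    expected_tags = {"{%s}Alignment" % ns for ns in ALIGN_NS}
    return any(child.tag in expected_tags for child in root)
```

Such a check only catches gross mistakes (a mistyped URL, an HTML error page returned instead of an alignment); it does not replace the validation performed by the platform.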

If the validation succeeds, the evaluation is launched and its results are shown.

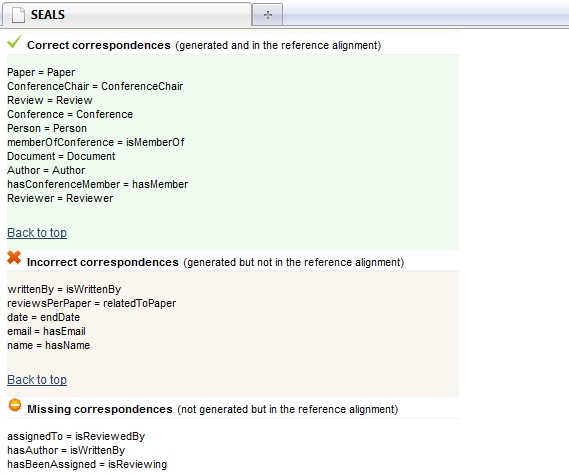

For each alignment, details about the correct, incorrect and missing correspondences are provided.
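To make the three categories concrete, here is a minimal sketch of how a produced alignment can be compared with a reference alignment using set operations. It is an illustration, not the platform's implementation: the names `Correspondence`, `Report` and `compare_alignments` are hypothetical, and correspondences are assumed to match only when both entities and the relation are identical.

```python
from typing import FrozenSet, NamedTuple, Set


class Correspondence(NamedTuple):
    """One correspondence: two entity URIs and a relation (assumed '=' by default)."""
    entity1: str
    entity2: str
    relation: str = "="


class Report(NamedTuple):
    correct: FrozenSet[Correspondence]
    incorrect: FrozenSet[Correspondence]
    missing: FrozenSet[Correspondence]
    precision: float
    recall: float


def compare_alignments(found: Set[Correspondence],
                       reference: Set[Correspondence]) -> Report:
    """Split correspondences into correct, incorrect and missing ones."""
    correct = frozenset(found & reference)    # found and expected
    incorrect = frozenset(found - reference)  # found but not expected
    missing = frozenset(reference - found)    # expected but not found
    precision = len(correct) / len(found) if found else 0.0
    recall = len(correct) / len(reference) if reference else 0.0
    return Report(correct, incorrect, missing, precision, recall)


if __name__ == "__main__":
    found = {Correspondence("o1#Paper", "o2#Article"),
             Correspondence("o1#Author", "o2#Reviewer")}
    reference = {Correspondence("o1#Paper", "o2#Article"),
                 Correspondence("o1#Author", "o2#Writer")}
    report = compare_alignments(found, reference)
    print(len(report.correct), len(report.incorrect), len(report.missing))
```

Precision is the share of found correspondences that are correct, and recall is the share of reference correspondences that were found.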

The deployment based evaluation relies on the Alignment API, so matchers have to be made available in Java. How to do so is described in detail in this tutorial. However, if you have followed our advice above, then this is already done.

Deploying a matcher on the SEALS platform may require more information than just a jar file. The SEALS platform allows for defining the environment under which your system is run: operating system, installed databases or resources like WordNet, etc. Your system is provided as a bundled zip file in which a deployment description file tells the platform what to do.

More information about the bundle will be posted soon.

So far, registration is not compulsory. It is nevertheless possible to express your interest in the SEALS project and to pre-register your tool for evaluation on the SEALS portal.

Later, to commit to participation, it will be necessary to register and to upload the tool to the SEALS platform.

Do not hesitate to contact Cassia.Trojahn # inrialpes : fr for any questions.