The purpose of this task was to match the Thesaurus of the Netherlands Institute for Sound and Vision (the GTAA) to WordNet and DBpedia. The GTAA is a faceted thesaurus; we use the Name, Person, Location and Subject facets. A description of the task can be found here.

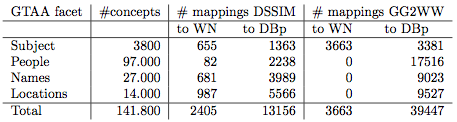

Two participants took part in the VLCR task. The evaluation of the results focuses on the differences between the participants as well as between the alignments of the four facets of the GTAA. The table below shows the number of concepts in each resource and the number of mappings returned for each resource pair.

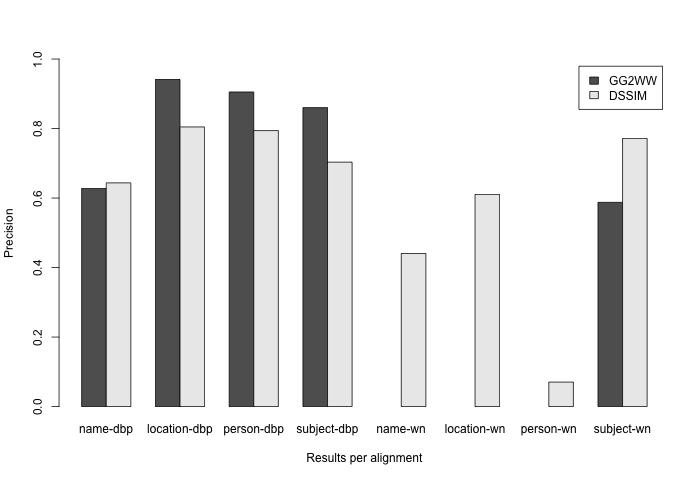

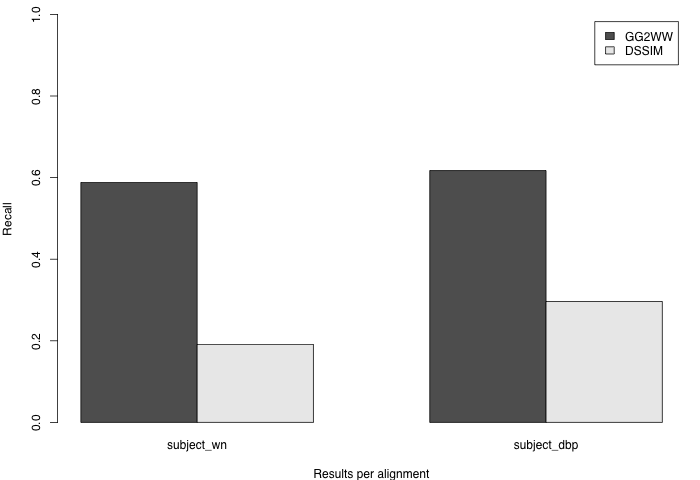

The alignment of each GTAA facet to WordNet and to DBpedia was evaluated separately. To obtain precision scores for these 8 alignments, we evaluated random samples of between 71 and 97 mappings. For recall scores, we compared the GTAA Subject to DBpedia and GTAA Subject to WordNet alignments to a reference alignment of 100 mappings that was created for the VLCR evaluation of 2008.
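The precision and recall computation described above can be sketched roughly as follows. This is a minimal illustration, not the evaluation tooling actually used: the function names, the seed parameter and the representation of a mapping as a (source, target) pair are all assumptions made for the example.

```python
import random

def sample_mappings(mappings, k, seed=0):
    """Draw a random sample of at most k mappings for manual rating.
    The fixed seed is an assumption, used only to make the sketch repeatable."""
    rng = random.Random(seed)
    return rng.sample(list(mappings), min(k, len(mappings)))

def precision(judgements):
    """Fraction of sampled mappings that a rater judged correct.
    judgements: list of booleans, True meaning 'correct'."""
    if not judgements:
        return 0.0
    return sum(judgements) / len(judgements)

def recall(found, reference):
    """Fraction of reference mappings that also occur in the returned alignment.
    Both arguments are collections of (source, target) pairs."""
    reference = set(reference)
    if not reference:
        return 0.0
    return len(set(found) & reference) / len(reference)
```

For instance, a sample with three correct judgements out of four gives `precision([True, True, False, True]) == 0.75`. The data in such a call is made up and does not correspond to any result reported here.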

The VLCR task description asked for skos:closeMatch and skos:exactMatch relations between concepts. However, both participants produced only exactMatches. In cooperation with the participants, we considered using the confidence measures as an indication of the strength of the mapping: a mapping with a confidence measure of 1 would be seen as an exactMatch and a mapping with a confidence measure below 1 as a closeMatch. However, this idea led to lower precision values for both participants and was therefore abandoned.
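The confidence-based reading that was considered, and later abandoned, amounts to a simple threshold rule. The sketch below illustrates it under the assumption that each mapping is a (source, target, confidence) tuple; the function names and the tuple layout are hypothetical and not taken from the participants' output format.

```python
def relation_for(confidence):
    """Hypothetical rule: confidence 1 -> exactMatch, below 1 -> closeMatch."""
    return "skos:exactMatch" if confidence >= 1.0 else "skos:closeMatch"

def split_by_relation(mappings):
    """Group (source, target, confidence) tuples by the relation the rule assigns."""
    groups = {"skos:exactMatch": [], "skos:closeMatch": []}
    for source, target, confidence in mappings:
        groups[relation_for(confidence)].append((source, target))
    return groups
```

Each group could then be rated against its own relation type, which is how such a split would feed into per-relation precision.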

A team of 4 raters rated random samples of DSSIM's mappings. A team of 3 raters rated the GG2WW mappings, where each alignment was divided between two raters. One rater was a member of both teams.

In order to check the inter-rater agreement, 100 mappings were rated by two raters. The agreement was high with a Cohen's kappa of 0.87. In addition, we compared this year's evaluation samples with those of 2008. 120 mappings appeared in both sets, and again the agreement between the scores was high; Cohen's kappa was 0.92.
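For readers unfamiliar with the statistic, Cohen's kappa corrects the observed agreement between two raters for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e). The following is a minimal sketch of that standard formula; the function name and the label values are assumptions for illustration, and this is not the tool used in the evaluation.

```python
from collections import Counter

def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two raters who labelled the same items.
    ratings_a, ratings_b: equal-length lists of category labels."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("both raters must rate the same non-empty set of items")
    n = len(ratings_a)
    # Observed agreement: share of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Chance agreement: product of the raters' marginal label frequencies.
    count_a = Counter(ratings_a)
    count_b = Counter(ratings_b)
    expected = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)
```

With the made-up ratings `["correct", "correct", "wrong", "wrong"]` and `["correct", "correct", "wrong", "correct"]`, observed agreement is 0.75, chance agreement is 0.5, and kappa is 0.5.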

We would like to thank Willem van Hage for the use of his tools for manual evaluation of mappings. We gratefully acknowledge the Netherlands Institute for Sound and Vision for allowing us to use the GTAA.

Send any questions, comments, or suggestions to:

Initial location of this page: http://www.cs.vu.nl/~laurah/oaei/2009/results.html