Matching systems (matchers) have been evaluated based on reference alignment with regard to their precision, recall, F1-measure performace. Next, we evaluated the degree of alignment incoherence and we also provide brief report about runtimes.

Regarding evaluation based on reference alignment, we first filtered out (from alignments generated using SEALS platform) all instance-to-any_entity and owl:Thing-to-any_entity correspondences prior to computing Precision/Recall/F1-measure because they are not contained in the reference alignment. In order to compute average Precision and Recall over all those alignments we used absolute scores (i.e. TP, FP, and FN). Therefore, resulted numbers can slightly differ with those computed by SEALS platform. Then we computed F1-measure based on average Precision and Recall in a standard way. Finally, we found the highest average F1-measure with thresholding (if possible).

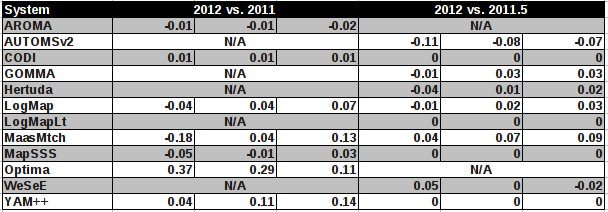

This year we had 18 participants. For overview table please see general information about results. Matchers which participated either in OAEI 2011 or OAEI 2011.5 we provide comparison in terms of highest average F1-measure below.

You can either download all alignments generated by SEALS platform for all test cases where each participant has its own directory. Or you can download subset of all alignment for which there is reference alignment. In this case we provide alignments after some tiny modifications about which we explained above. Allignments are stored as it follows: matcher-ontology1-ontology2.rdf.

Reference alignment contains 21 alignments (testcases), which corresponds to the complete alignment space between 7 ontologies from the data set. This is a subset of all ontologies within this track (16). Total number of testcases is hence 120. There are two variants of reference alignment:

In order to provide some context for understanding matchers performance we made two simple string-based matchers as baselines. Baseline1 is a string matcher based on string equality applied on local names of entities which were lowercased before. Baseline2 enhanced baseline1 with three string operations: removing of dashes, underscore and 'has' words from all local names.

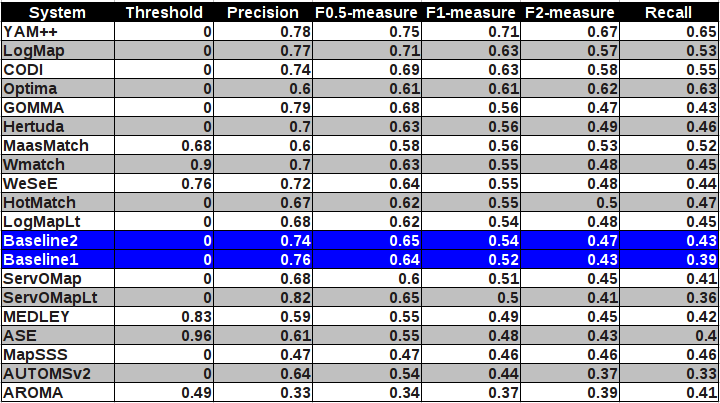

In the table below, there are results of all 18 participants with regard to the reference alignment (ra2). There are precision, recall, F1-measure, F2-measure and F0.5-measure computed for the threshold that provides the highest average F1-measure. F1-measure is the harmonic mean of precision and recall. F2-measure (for beta=2) weights recall higher than precision and F0.5-measure (for beta=0.5) weights precision higher than recall.

Matchers are ordered according to their highest average F1-measure. According to matcher's position with regard to two baselines it can be in one of two basic groups:

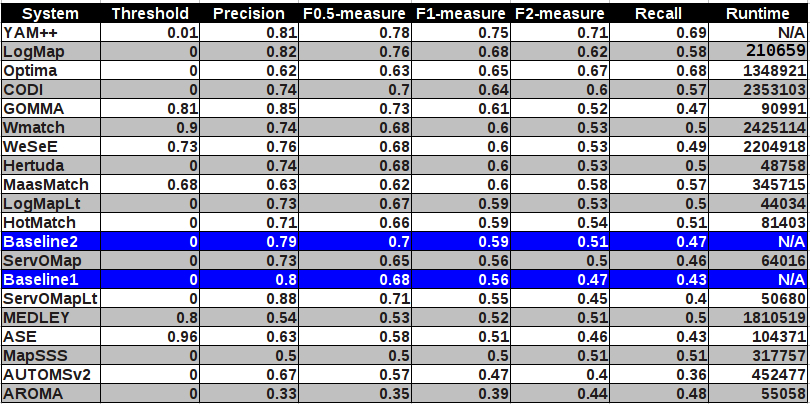

For better comparison with previous years there is also table summarizing performance based on original reference alignment (ra1). In the case of evaluation based on ra1 the results are almost in all cases better by 0.03 to 0.05 points. The order of matchers according to F1-measure is not preserved, e.g. CODI went over Optima.

According to matcher's position with regard to two baselines it can be in one of three groups:

Note: ASE and AUTOMSv2 matchers did not generate 3 out of 21 alignments. Thus, results for them are just approximations.

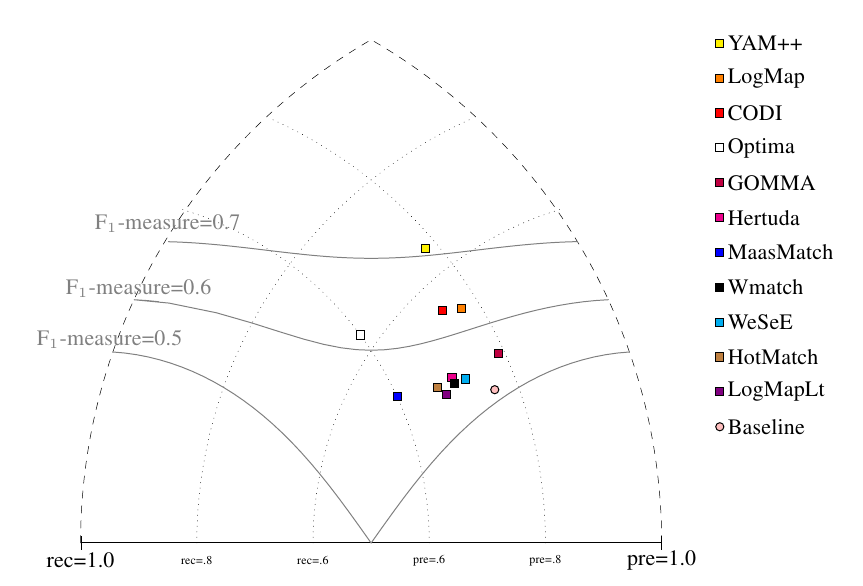

Performance of matchers regarding an average F1-measure is visualized in the figure below where matchers of participants from first two groups are represented as squares. Baselines are represented as circles. Horizontal line depicts level of precision/recall while values of average F1-measure are depicted by areas bordered by corresponding lines F1-measure=0.[5|6|7].

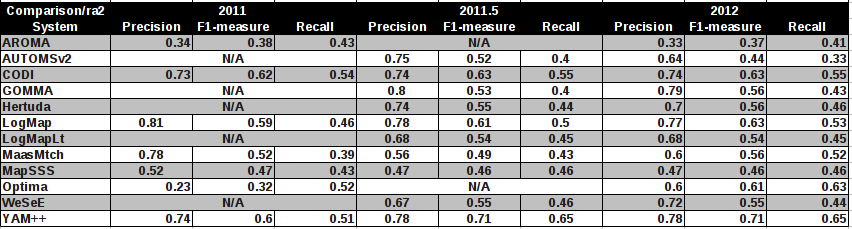

Table below summarize performance results of matchers participated in more than one edition of OAEI, conference track with regard to reference alignment ra2.

The highest improvement achieved Optima (0.29 increase wrt. F1-measure) and YAM++ (0.11 increase wrt. F1-measure) between OAEI 2012 and OAEI 2011, see Table below.

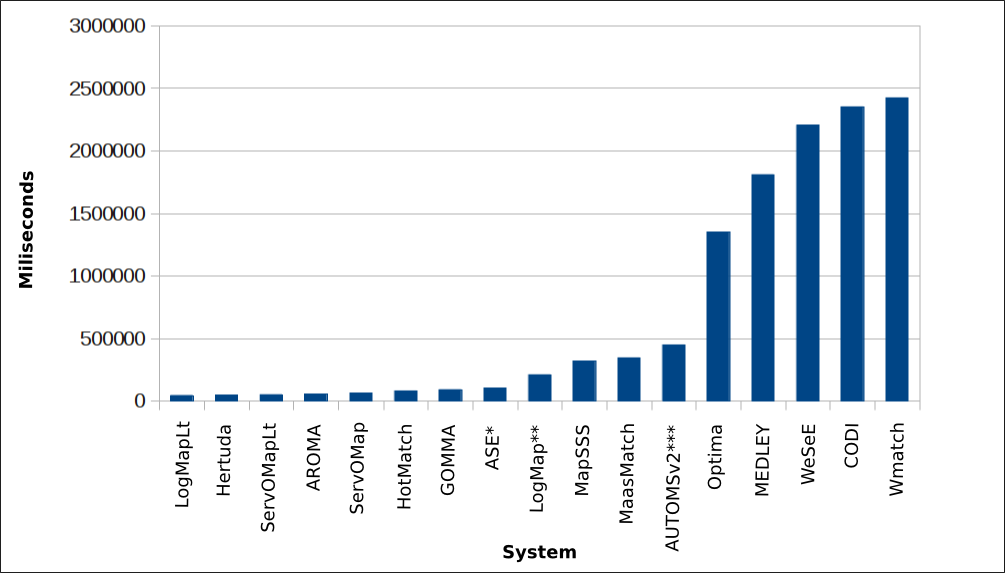

We measured total runtime on generating all 120 alignments. It was executed on a laptop with Unbuntu machine running on Intel Core i5, 2.67GHz and 4GB RAM. Since the conference track consists of rather small ontologies (up to 140 classes) we set a time limit of five hours. In case the system did not finish it in time (after 5 hours), we stopped the experiments.

Total runtimes of 18 matchers are in the plot below. Experiment with YAM++ was stopped after 5 hours time limit.

There are four matchers which finished all 120 testcases within 1 minute (LogMapLt - 44 seconds, Hertuda - 49 seconds, ServOMapLt - 50 seconds and AROMA - 55 seconds). Next four matchers need less than 2 minutes (ServOMap, HotMatch, GOMMA and ASE). 10 minutes are enough for next four matchers (LogMap, MapSSS, MaasMatch and AUTOMSv2). Finally, 5 matchers needed up to 40 minutes to finish all 120 testcases (Optima 22 min, MEDLEY - 30 min, WeSeE - 36 min, CODI - 39 min and Wmatch - 40 min).

Further notes:

As in the previous years, we apply the Maximum Cardinality measure to evaluate the degree of alignment incoherence. Details on this measure and its implementation can be found in [1]. The results of our experiments are depicted in the following table. In opposite to the previous year, we only compute the average for all test cases of the conference track for which there exists a reference alignment. The presented results are thus aggregated mean values for 21 test cases. In some cases we could not compute the degree of incoherence due to the combinatorial complexity of the problem. In this case we were still able to compute a lower bound for which we know that the actual degree is (probably only slightly) higher. Such results are marked with a *. Note that we only included those systems in our evaluation, that generated alignments for all test cases of the subset with reference alignments.

| Matcher | AROMA | CODI | GOMMA | Hertuda | HotMatch | LogMap | LogMapLt | MaasMatch * | MapSSS | MEDLEY * | Optima | ServOMap | ServOMapLt | WeSeE | Wmatch | YAM++ |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Alignment Size | 20.9 | 11.1 | 8.2 | 9.9 | 10.5 | 10.3 | 9.9 | 83.1 | 14.8 | 55.6 | 15.9 | 9 | 6.5 | 9.4 | 9.9 | 12.5 |

| Incoherence Degree | 19.4% | 0% | 1.1% | 5.2% | 5% | 0% | 5.4% | 24.3% | 12.6% | 30.5% | 7.6% | 3.3% | 0% | 3.2% | 6% | 0% |

| Incoherent Alignments | 18 | 0 | 2 | 9 | 9 | 0 | 7 | 20 | 18 | 20 | 12 | 5 | 0 | 6 | 10 | 0 |

Four matching systems can generate coherent alignments. These systems are CODI, LogMap, ServOMapLt, and YAM++. However, it is not always clear whether this is related to a specific approach that tries to ensure the coherency, or whether this is only indirectly caused by generating small and highly precise alignments. Especially, the coherence of the ServOMapLt alignments might be caused by such an approach. The system generates overall the smallest alignments. Because there are some systems that cannot generate a coherent alignment for alignments that have in average a size from 8 to 12 correspondences, it can be assumed that CODI, LogMap, and YAM++ have implemented specific coherency-preserving methods. Those system generate also between 8 to 12 correspondences, however, none of their alignments is incoherent. This is an important improvement compared to the previous years, for which we observed that only one or two systems managed to generate (nearly) coherent alignments.

Contact address is Ondřej Šváb-Zamazal (ondrej.zamazal at vse dot cz).

[1] Christian Meilicke. Alignment Incoherence in Ontology Matching. PhD thesis at University Mannheim 2011.