
We have three variants of crisp reference alignments (the confidence values of all matches are 1.0). Each variant contains 21 alignments (test cases), which corresponds to the complete alignment space between 7 ontologies from the OntoFarm data set. These 7 ontologies are a subset of the 16 ontologies in this track [4]; see the OntoFarm data set web page.
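The count of 21 follows from taking one test case per unordered pair of distinct ontologies, i.e. 7 × 6 / 2 = 21. A minimal Python check, using placeholder names rather than the actual OntoFarm ontology identifiers:

```python
from itertools import combinations

# Placeholder names standing in for the 7 OntoFarm ontologies (hypothetical).
ontologies = [f"onto{i}" for i in range(1, 8)]

# One test case per unordered pair of distinct ontologies.
test_cases = list(combinations(ontologies, 2))

print(len(test_cases))  # 21
```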

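For each reference alignment, we provide three evaluation variants: M1 contains only classes, M2 contains only properties, and M3 contains both classes and properties.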
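A minimal Python sketch of how the three variants relate to a single reference alignment, assuming that M1 keeps only class correspondences, M2 only property correspondences, and M3 all of them. The `Correspondence` record, its `kind` field, and the entity names are hypothetical.

```python
from dataclasses import dataclass
from typing import Literal


# Hypothetical record for one correspondence; `kind` is assumed to be the
# entity type shared by both sides (classes are matched to classes, etc.).
@dataclass(frozen=True)
class Correspondence:
    source: str
    target: str
    kind: Literal["class", "property"]


def variants(alignment: list[Correspondence]) -> dict[str, list[Correspondence]]:
    """Derive the three evaluation variants from one reference alignment."""
    return {
        "M1": [c for c in alignment if c.kind == "class"],     # classes only
        "M2": [c for c in alignment if c.kind == "property"],  # properties only
        "M3": list(alignment),                                  # both
    }


# Toy reference alignment with made-up entity names.
ref = [
    Correspondence("o1#Paper", "o2#Paper", "class"),
    Correspondence("o1#writtenBy", "o2#hasAuthor", "property"),
]
v = variants(ref)
print({name: len(cs) for name, cs in v.items()})  # {'M1': 1, 'M2': 1, 'M3': 2}
```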

rar2-M3 is used as the main reference alignment this year. It will also be used in the synthesis paper.

| | ra1 | ra2 | rar2 |
|---|---|---|---|
| M1 | ra1-M1 | ra2-M1 | rar2-M1 |
| M2 | ra1-M2 | ra2-M2 | rar2-M2 |
| M3 | ra1-M3 | ra2-M3 | rar2-M3 |

For the evaluation based on reference alignments, we first filtered out all instance-to-any_entity and owl:Thing-to-any_entity correspondences from the generated alignments before computing precision, recall, F1-measure, F2-measure, and F0.5-measure, because such correspondences are not contained in the reference alignments. To compute average precision and recall over all alignments, we used absolute scores, i.e., we computed precision and recall from the TP, FP, and FN counts summed over all 21 test cases. This corresponds to micro-averaged precision and recall, so the resulting numbers can differ slightly from those computed by the SEALS platform, which uses macro-averaged precision and recall. We then computed the F1-measure in the standard way. Finally, where possible, we found the highest average F1-measure by thresholding, i.e., by varying the confidence threshold below which correspondences are discarded.
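The filtering step and the difference between the micro average used here and the macro average reported by SEALS can be sketched in Python as follows. This is an illustrative sketch, not the track's evaluation code: correspondences are modelled as (source, target) pairs, the set of instance IRIs is assumed to be known in advance, and `safe_div` returning 0.0 for an empty denominator is an assumption about edge cases.

```python
from typing import Iterable

OWL_THING = "http://www.w3.org/2002/07/owl#Thing"

Pair = tuple[str, str]              # (source entity, target entity)
Case = tuple[set[Pair], set[Pair]]  # (system alignment, reference alignment)


def filter_pairs(system: set[Pair], instances: set[str]) -> set[Pair]:
    """Drop correspondences where either side is an instance or owl:Thing."""
    excluded = instances | {OWL_THING}
    return {(s, t) for s, t in system if s not in excluded and t not in excluded}


def counts(system: set[Pair], reference: set[Pair]) -> tuple[int, int, int]:
    """Return (TP, FP, FN) for a single test case."""
    tp = len(system & reference)
    return tp, len(system) - tp, len(reference) - tp


def safe_div(num: int, den: int) -> float:
    return num / den if den else 0.0


def micro_pr(cases: Iterable[Case]) -> tuple[float, float]:
    """Sum TP/FP/FN over all test cases first, then compute P and R once."""
    tp = fp = fn = 0
    for system, reference in cases:
        t, f_p, f_n = counts(system, reference)
        tp, fp, fn = tp + t, fp + f_p, fn + f_n
    return safe_div(tp, tp + fp), safe_div(tp, tp + fn)


def macro_pr(cases: list[Case]) -> tuple[float, float]:
    """Compute P and R per test case, then average them."""
    per_case = [counts(system, reference) for system, reference in cases]
    ps = [safe_div(t, t + f_p) for t, f_p, _ in per_case]
    rs = [safe_div(t, t + f_n) for t, _, f_n in per_case]
    return sum(ps) / len(ps), sum(rs) / len(rs)


# Toy data with made-up entity names.
raw = {("o1#A", "o2#A"), ("o1#alice", "o2#B"), (OWL_THING, "o2#C")}
print(filter_pairs(raw, instances={"o1#alice"}))  # {('o1#A', 'o2#A')}

cases = [
    ({("o1#A", "o2#A"), ("o1#B", "o2#X")}, {("o1#A", "o2#A")}),
    ({("o3#C", "o4#C")}, {("o3#C", "o4#C"), ("o3#D", "o4#D"),
                          ("o3#E", "o4#E"), ("o3#F", "o4#F")}),
]
print(micro_pr(cases))  # P = 2/3, R = 0.4
print(macro_pr(cases))  # (0.75, 0.625)
```

On this toy data, the case with many missed correspondences drags the micro-averaged recall down more than the macro average, because micro averaging weights each test case by its size.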

To provide some context for understanding the matchers' performance, we included two simple string-based matchers as baselines. StringEquiv (previously called Baseline1) is a string matcher based on string equality applied to the lowercased local names of entities; this baseline was also used in the anatomy track in 2012. edna (a string edit distance matcher) was adopted from the benchmark track; with respect to performance, it is very similar to the previously used baseline2.
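A Python sketch of the two baselines. The StringEquiv part follows the description above (equality of lowercased local names); the rule for extracting a local name from an IRI is an assumption. For edna, only "string edit distance" is stated, so the normalization by the longer name and the 0.8 cut-off in the hypothetical `edna_like` function are illustrative assumptions, not the benchmark track's actual settings.

```python
def local_name(iri: str) -> str:
    """Fragment after the last '#' or '/', assumed to be the entity's local name."""
    return iri.rsplit("#", 1)[-1].rsplit("/", 1)[-1]


def string_equiv(src: list[str], tgt: list[str]) -> set[tuple[str, str]]:
    """StringEquiv-style baseline: exact equality of lowercased local names."""
    index: dict[str, list[str]] = {}
    for t in tgt:
        index.setdefault(local_name(t).lower(), []).append(t)
    return {(s, t) for s in src for t in index.get(local_name(s).lower(), [])}


def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance (insert/delete/substitute cost 1)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,               # deletion
                           cur[j - 1] + 1,            # insertion
                           prev[j - 1] + (ca != cb)))  # substitution
        prev = cur
    return prev[-1]


def edna_like(src: list[str], tgt: list[str],
              threshold: float = 0.8) -> dict[tuple[str, str], float]:
    """Hypothetical edit-distance baseline; `threshold` is an assumed cut-off."""
    out: dict[tuple[str, str], float] = {}
    for s in src:
        for t in tgt:
            a, b = local_name(s).lower(), local_name(t).lower()
            sim = 1 - levenshtein(a, b) / max(len(a), len(b), 1)
            if sim >= threshold:
                out[(s, t)] = sim
    return out


src = ["http://example.org/o1#Paper", "http://example.org/o1#Reviewer"]
tgt = ["http://example.org/o2#paper", "http://example.org/o2#Reviewers"]
print(string_equiv(src, tgt))       # only the Paper/paper pair
print(sorted(edna_like(src, tgt)))  # Paper/paper and Reviewer/Reviewers
```

The example shows why an edit-distance matcher can find slightly more correspondences than exact string equality: "Reviewer" and "Reviewers" differ by one character.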

The tables below show the results of all 12 tools for all combinations of evaluation variants with the crisp reference alignments. Precision, recall, F1-measure, F2-measure, and F0.5-measure are reported for the threshold that yields the highest average F1-measure for each matcher. The F1-measure is the harmonic mean of precision and recall. The F2-measure (β = 2) weights recall higher than precision, and the F0.5-measure (β = 0.5) weights precision higher than recall.
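All three measures are instances of the general F-beta formula, F_β = (1 + β²)·P·R / (β²·P + R). The Python sketch below implements it together with a simple threshold sweep that keeps correspondences with confidence ≥ threshold and picks the threshold with the highest micro-averaged F1. The threshold grid and the tie-breaking rule (lowest threshold wins) are assumptions. Because the published values were computed from unrounded precision and recall, recomputing them from the rounded table entries may occasionally differ in the last digit; for the SEBMatcher row of the first table it reproduces 0.77, 0.69, and 0.63.

```python
def f_beta(p: float, r: float, beta: float) -> float:
    """Weighted harmonic mean of precision and recall; beta > 1 favours recall."""
    b2 = beta * beta
    denom = b2 * p + r
    return (1 + b2) * p * r / denom if denom else 0.0


def best_threshold(cases, thresholds):
    """Return (threshold, F1) maximising micro-averaged F1.

    `cases` is a list of (system, reference) pairs where `system` maps each
    correspondence to its confidence and `reference` is a set of correspondences.
    On ties, the lowest threshold is kept.
    """
    best = (None, -1.0)
    for th in sorted(thresholds):
        tp = fp = fn = 0
        for system, reference in cases:
            kept = {pair for pair, conf in system.items() if conf >= th}
            t = len(kept & reference)
            tp, fp, fn = tp + t, fp + len(kept) - t, fn + len(reference) - t
        p = tp / (tp + fp) if tp + fp else 0.0
        r = tp / (tp + fn) if tp + fn else 0.0
        f1 = f_beta(p, r, 1.0)
        if f1 > best[1]:
            best = (th, f1)
    return best


# SEBMatcher row of the first table: P = 0.84, R = 0.59.
for beta in (0.5, 1.0, 2.0):
    print(beta, round(f_beta(0.84, 0.59, beta), 2))  # 0.77, 0.69, 0.63

# Toy data: a low-confidence false positive is removed by a 0.5 threshold.
toy = [({("o1#A", "o2#A"): 1.0, ("o1#B", "o2#X"): 0.4}, {("o1#A", "o2#A")})]
print(best_threshold(toy, [0.0, 0.5]))  # (0.5, 1.0)
```

This also explains why most rows report a threshold of 0.0: when a tool outputs all correspondences with the same confidence, raising the threshold cannot improve F1.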

Results for ra1-M1:

| Matcher | Threshold | Precision | F0.5-measure | F1-measure | F2-measure | Recall |
|---|---|---|---|---|---|---|
| SEBMatcher | 0.0 | 0.84 | 0.77 | 0.69 | 0.63 | 0.59 |
| StringEquiv | 0.0 | 0.88 | 0.76 | 0.64 | 0.55 | 0.5 |
| Matcha | | | | | | |
| LogMapLt | 0.0 | 0.84 | 0.76 | 0.66 | 0.58 | 0.54 |
| LSMatch | 0.0 | 0.88 | 0.76 | 0.64 | 0.55 | 0.5 |
| ATMatcher | 0.51 | 0.81 | 0.75 | 0.68 | 0.62 | 0.59 |
| ALIOn | 0.0 | 0.75 | 0.54 | 0.38 | 0.29 | 0.25 |
| AMD | 0.0 | 0.87 | 0.76 | 0.64 | 0.55 | 0.5 |
| ALIN | 0.0 | 0.88 | 0.79 | 0.68 | 0.59 | 0.55 |
| LogMap | 0.0 | 0.84 | 0.79 | 0.72 | 0.66 | 0.63 |
| KGMatcher+ | 0.0 | 0.88 | 0.75 | 0.61 | 0.52 | 0.47 |
| edna | 0.0 | 0.88 | 0.78 | 0.67 | 0.59 | 0.54 |
| GraphMatcher | 0.0 | 0.82 | 0.77 | 0.71 | 0.65 | 0.62 |
| TOMATO | 0.0 | 0.1 | 0.12 | 0.17 | 0.31 | 0.68 |

Results for ra1-M2:

| Matcher | Threshold | Precision | F0.5-measure | F1-measure | F2-measure | Recall |
|---|---|---|---|---|---|---|
| SEBMatcher | | | | | | |
| StringEquiv | 0.0 | 0.07 | 0.05 | 0.03 | 0.02 | 0.02 |
| Matcha | 0.76 | 0.49 | 0.48 | 0.47 | 0.47 | 0.46 |
| LogMapLt | 0.0 | 0.24 | 0.24 | 0.23 | 0.22 | 0.22 |
| LSMatch | | | | | | |
| ATMatcher | | | | | | |
| ALIOn | | | | | | |
| AMD | | | | | | |
| ALIN | | | | | | |
| LogMap | 0.79 | 0.62 | 0.5 | 0.39 | 0.31 | 0.28 |
| KGMatcher+ | | | | | | |
| edna | 0.0 | 0.21 | 0.18 | 0.14 | 0.12 | 0.11 |
| GraphMatcher | 0.94 | 0.65 | 0.51 | 0.39 | 0.32 | 0.28 |
| TOMATO | 0.0 | 0.06 | 0.07 | 0.1 | 0.17 | 0.33 |

Results for ra1-M3:

| Matcher | Threshold | Precision | F0.5-measure | F1-measure | F2-measure | Recall |
|---|---|---|---|---|---|---|
| SEBMatcher | 0.0 | 0.84 | 0.74 | 0.63 | 0.54 | 0.5 |
| StringEquiv | 0.0 | 0.8 | 0.68 | 0.56 | 0.47 | 0.43 |
| Matcha | 0.0 | 0.38 | 0.22 | 0.13 | 0.09 | 0.08 |
| LogMapLt | 0.0 | 0.73 | 0.67 | 0.59 | 0.53 | 0.5 |
| LSMatch | 0.0 | 0.88 | 0.72 | 0.57 | 0.47 | 0.42 |
| ATMatcher | 0.0 | 0.74 | 0.69 | 0.62 | 0.56 | 0.53 |
| ALIOn | 0.0 | 0.75 | 0.51 | 0.34 | 0.26 | 0.22 |
| AMD | 0.0 | 0.87 | 0.72 | 0.58 | 0.48 | 0.43 |
| ALIN | 0.0 | 0.88 | 0.75 | 0.61 | 0.52 | 0.47 |
| LogMap | 0.0 | 0.81 | 0.75 | 0.68 | 0.61 | 0.58 |
| KGMatcher+ | 0.0 | 0.88 | 0.71 | 0.55 | 0.45 | 0.4 |
| edna | 0.0 | 0.79 | 0.7 | 0.59 | 0.51 | 0.47 |
| GraphMatcher | 0.0 | 0.8 | 0.74 | 0.67 | 0.6 | 0.57 |
| TOMATO | 0.0 | 0.09 | 0.11 | 0.16 | 0.29 | 0.63 |

Results for ra2-M1:

| Matcher | Threshold | Precision | F0.5-measure | F1-measure | F2-measure | Recall |
|---|---|---|---|---|---|---|
| SEBMatcher | 0.0 | 0.8 | 0.73 | 0.65 | 0.59 | 0.55 |
| StringEquiv | 0.0 | 0.83 | 0.71 | 0.58 | 0.5 | 0.45 |
| Matcha | | | | | | |
| LogMapLt | 0.0 | 0.79 | 0.7 | 0.6 | 0.53 | 0.49 |
| LSMatch | 0.0 | 0.83 | 0.71 | 0.58 | 0.5 | 0.45 |
| ATMatcher | 0.0 | 0.7 | 0.67 | 0.63 | 0.59 | 0.57 |
| ALIOn | 0.0 | 0.67 | 0.48 | 0.33 | 0.25 | 0.22 |
| AMD | 0.0 | 0.82 | 0.71 | 0.59 | 0.5 | 0.46 |
| ALIN | 0.0 | 0.82 | 0.73 | 0.62 | 0.54 | 0.5 |
| LogMap | 0.0 | 0.79 | 0.73 | 0.66 | 0.6 | 0.57 |
| KGMatcher+ | 0.0 | 0.83 | 0.7 | 0.57 | 0.48 | 0.43 |
| edna | 0.0 | 0.82 | 0.72 | 0.61 | 0.52 | 0.48 |
| GraphMatcher | 0.0 | 0.78 | 0.73 | 0.66 | 0.6 | 0.57 |
| TOMATO | 0.0 | 0.09 | 0.11 | 0.16 | 0.28 | 0.61 |

Results for ra2-M2:

| Matcher | Threshold | Precision | F0.5-measure | F1-measure | F2-measure | Recall |
|---|---|---|---|---|---|---|
| SEBMatcher | | | | | | |
| StringEquiv | 0.0 | 0.07 | 0.05 | 0.03 | 0.02 | 0.02 |
| Matcha | 0.76 | 0.49 | 0.48 | 0.47 | 0.47 | 0.46 |
| LogMapLt | 0.0 | 0.24 | 0.24 | 0.23 | 0.22 | 0.22 |
| LSMatch | | | | | | |
| ATMatcher | | | | | | |
| ALIOn | | | | | | |
| AMD | | | | | | |
| ALIN | | | | | | |
| LogMap | 0.79 | 0.62 | 0.5 | 0.39 | 0.31 | 0.28 |
| KGMatcher+ | | | | | | |
| edna | 0.0 | 0.21 | 0.18 | 0.14 | 0.12 | 0.11 |
| GraphMatcher | 0.94 | 0.65 | 0.51 | 0.39 | 0.32 | 0.28 |
| TOMATO | 0.0 | 0.06 | 0.07 | 0.1 | 0.17 | 0.33 |

Results for ra2-M3:

| Matcher | Threshold | Precision | F0.5-measure | F1-measure | F2-measure | Recall |
|---|---|---|---|---|---|---|
| SEBMatcher | 0.0 | 0.8 | 0.7 | 0.59 | 0.51 | 0.47 |
| StringEquiv | 0.0 | 0.76 | 0.64 | 0.52 | 0.43 | 0.39 |
| Matcha | 0.0 | 0.38 | 0.2 | 0.12 | 0.08 | 0.07 |
| LogMapLt | 0.0 | 0.68 | 0.62 | 0.54 | 0.48 | 0.45 |
| LSMatch | 0.0 | 0.83 | 0.68 | 0.53 | 0.44 | 0.39 |
| ATMatcher | 0.0 | 0.7 | 0.64 | 0.58 | 0.52 | 0.49 |
| ALIOn | 0.0 | 0.67 | 0.45 | 0.3 | 0.22 | 0.19 |
| AMD | 0.0 | 0.82 | 0.67 | 0.53 | 0.44 | 0.39 |
| ALIN | 0.0 | 0.82 | 0.69 | 0.56 | 0.48 | 0.43 |
| LogMap | 0.0 | 0.77 | 0.71 | 0.63 | 0.57 | 0.53 |
| KGMatcher+ | 0.0 | 0.83 | 0.66 | 0.51 | 0.42 | 0.37 |
| edna | 0.0 | 0.74 | 0.65 | 0.54 | 0.47 | 0.43 |
| GraphMatcher | 0.0 | 0.76 | 0.7 | 0.62 | 0.56 | 0.53 |
| TOMATO | 0.0 | 0.09 | 0.11 | 0.16 | 0.28 | 0.57 |

Results for rar2-M1:

| Matcher | Threshold | Precision | F0.5-measure | F1-measure | F2-measure | Recall |
|---|---|---|---|---|---|---|
| SEBMatcher | 0.0 | 0.79 | 0.73 | 0.66 | 0.6 | 0.57 |
| StringEquiv | 0.0 | 0.83 | 0.72 | 0.61 | 0.52 | 0.48 |
| Matcha | | | | | | |
| LogMapLt | 0.0 | 0.78 | 0.71 | 0.62 | 0.56 | 0.52 |
| LSMatch | 0.0 | 0.83 | 0.72 | 0.61 | 0.52 | 0.48 |
| ATMatcher | 0.0 | 0.69 | 0.67 | 0.64 | 0.61 | 0.59 |
| ALIOn | 0.0 | 0.66 | 0.48 | 0.34 | 0.26 | 0.23 |
| AMD | 0.0 | 0.82 | 0.72 | 0.61 | 0.52 | 0.48 |
| ALIN | 0.0 | 0.82 | 0.74 | 0.64 | 0.56 | 0.52 |
| LogMap | 0.0 | 0.78 | 0.74 | 0.68 | 0.63 | 0.6 |
| KGMatcher+ | 0.0 | 0.83 | 0.71 | 0.58 | 0.5 | 0.45 |
| edna | 0.0 | 0.82 | 0.73 | 0.63 | 0.55 | 0.51 |
| GraphMatcher | 0.0 | 0.77 | 0.73 | 0.67 | 0.62 | 0.59 |
| TOMATO | 0.0 | 0.09 | 0.11 | 0.16 | 0.29 | 0.65 |

Results for rar2-M2:

| Matcher | Threshold | Precision | F0.5-measure | F1-measure | F2-measure | Recall |
|---|---|---|---|---|---|---|
| SEBMatcher | | | | | | |
| StringEquiv | 0.0 | 0.07 | 0.05 | 0.03 | 0.02 | 0.02 |
| Matcha | 0.92 | 0.7 | 0.59 | 0.48 | 0.4 | 0.36 |
| LogMapLt | 0.0 | 0.24 | 0.24 | 0.23 | 0.22 | 0.22 |
| LSMatch | | | | | | |
| ATMatcher | | | | | | |
| ALIOn | | | | | | |
| AMD | | | | | | |
| ALIN | | | | | | |
| LogMap | 0.79 | 0.62 | 0.51 | 0.4 | 0.32 | 0.29 |
| KGMatcher+ | | | | | | |
| edna | 0.0 | 0.21 | 0.18 | 0.14 | 0.12 | 0.11 |
| GraphMatcher | 0.94 | 0.65 | 0.52 | 0.4 | 0.33 | 0.29 |
| TOMATO | 0.0 | 0.06 | 0.07 | 0.1 | 0.17 | 0.33 |

Results for rar2-M3:

| Matcher | Threshold | Precision | F0.5-measure | F1-measure | F2-measure | Recall |
|---|---|---|---|---|---|---|
| SEBMatcher | 0.0 | 0.79 | 0.7 | 0.6 | 0.52 | 0.48 |
| StringEquiv | 0.0 | 0.76 | 0.65 | 0.53 | 0.45 | 0.41 |
| Matcha | 0.0 | 0.37 | 0.2 | 0.12 | 0.08 | 0.07 |
| LogMapLt | 0.0 | 0.68 | 0.62 | 0.56 | 0.5 | 0.47 |
| LSMatch | 0.0 | 0.83 | 0.69 | 0.55 | 0.46 | 0.41 |
| ATMatcher | 0.0 | 0.69 | 0.64 | 0.59 | 0.54 | 0.51 |
| ALIOn | 0.0 | 0.66 | 0.44 | 0.3 | 0.22 | 0.19 |
| AMD | 0.0 | 0.82 | 0.68 | 0.55 | 0.46 | 0.41 |
| ALIN | 0.0 | 0.82 | 0.7 | 0.57 | 0.48 | 0.44 |
| LogMap | 0.0 | 0.76 | 0.71 | 0.64 | 0.59 | 0.56 |
| KGMatcher+ | 0.0 | 0.83 | 0.67 | 0.52 | 0.43 | 0.38 |
| edna | 0.0 | 0.74 | 0.66 | 0.56 | 0.49 | 0.45 |
| GraphMatcher | 0.0 | 0.75 | 0.7 | 0.63 | 0.58 | 0.55 |
| TOMATO | 0.0 | 0.09 | 0.11 | 0.16 | 0.28 | 0.6 |

The table below summarizes the performance of tools that participated in the last two years of the OAEI Conference track with respect to reference alignment rar2.

Based on this evaluation, four of the matching tools (KGMatcher+, LogMap, LogMapLt, and LSMatch) achieved unchanged results, while two (AMD and ATMatcher) performed very slightly worse.

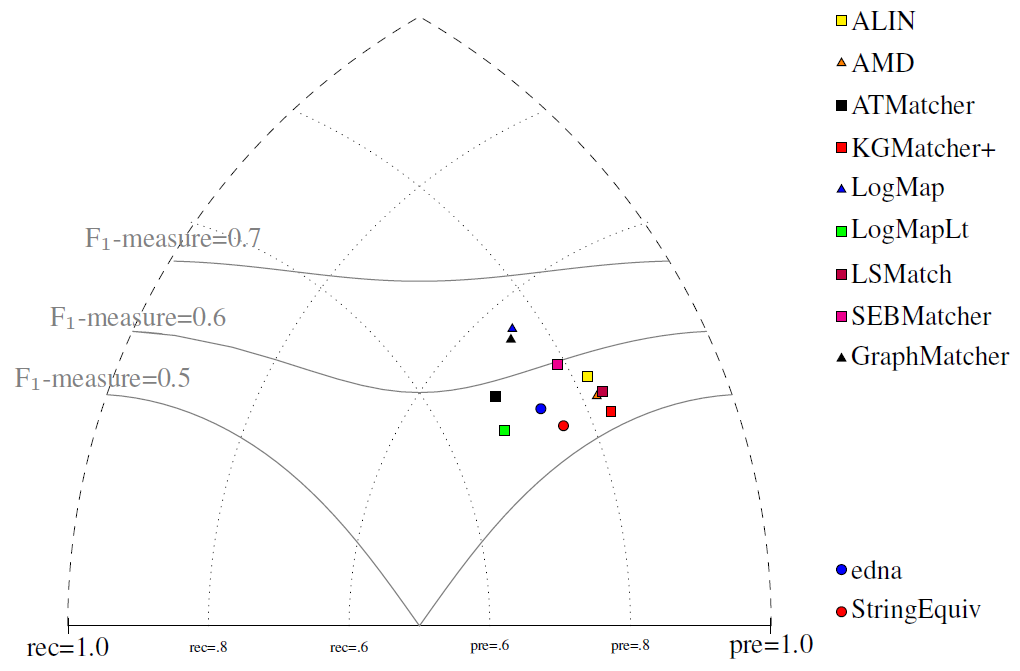

The figure below visualizes the performance of all tools in terms of average F1-measure. Tools are represented as squares or triangles, and baselines as circles. The horizontal line depicts the level of precision/recall, while values of average F1-measure are depicted by areas bordered by the corresponding lines F1-measure = 0.5, 0.6, and 0.7.
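Each boundary line F1-measure = c is the set of precision/recall pairs that give exactly that F1 value. Solving the F1 definition for recall makes the shape of these lines explicit:

$$
F_1 = \frac{2PR}{P + R} = c
\;\Longrightarrow\;
2PR - cR = cP
\;\Longrightarrow\;
R = \frac{cP}{2P - c}, \qquad P > \frac{c}{2}.
$$

For example, with c = 0.6 and P = 0.75, the line passes through R = 0.45 / 0.9 = 0.5. Tools lying beyond a line therefore have an average F1-measure above that value.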

The evaluation variants M1 and M2 show that seven matchers (AMD, ALIN, ALIOn, ATMatcher, KGMatcher+, LSMatch, and SEBMatcher) do not match properties at all. On the other hand, Matcha does not match classes at all, while it dominates in matching properties. Naturally, this lowers these tools' overall performance in the M3 evaluation variant.

[1] Michelle Cheatham, Pascal Hitzler: Conference v2.0: An Uncertain Version of the OAEI Conference Benchmark. International Semantic Web Conference (2) 2014: 33-48.

[2] Alessandro Solimando, Ernesto Jiménez-Ruiz, Giovanna Guerrini: Detecting and Correcting Conservativity Principle Violations in Ontology-to-Ontology Mappings. International Semantic Web Conference (2) 2014: 1-16.

[3] Alessandro Solimando, Ernesto Jiménez-Ruiz, Giovanna Guerrini: A Multi-strategy Approach for Detecting and Correcting Conservativity Principle Violations in Ontology Alignments. OWL: Experiences and Directions Workshop (OWLED) 2014: 13-24.

[4] Ondřej Zamazal, Vojtěch Svátek: The Ten-Year OntoFarm and its Fertilization within the Onto-Sphere. Web Semantics: Science, Services and Agents on the World Wide Web 43: 46-53 (2018).

[5] Martin Šatra, Ondřej Zamazal: Towards Matching of Domain Ontologies to Cross-Domain Ontology: Evaluation Perspective. Ontology Matching 2020.