The growth of the ontology alignment area in the past ten years has led to the development of many ontology alignment tools. After several years of experience in the OAEI, we observed that the results can only be slightly improved in terms of the alignment quality (precision/recall resp. F-measure). Based on this insight, it is clear that fully automatic ontology matching approaches slowly reach an upper bound of the alignment quality they can achieve. A work by (Jimenez-Ruiz et al., 2012) has shown that simulating user interactions with 30% error rate during the alignment process has led to the same results as non-interactive matching. Thus, in addition to the validation of the automatically generated alignments by domain experts, we believe that there is further room for improving the quality of the generated alignments by incorporating user interaction. User involvement during the matching process has been identified as one of the challenges in front of the ontology alignment community by (Shvaiko et al., 2013) and user interaction with a system is an integral part of it.

At the same time with the tendency of increasing ontology sizes, the alignment problem also grows. It is not feasible for a user to, for instance validate all candidate mappings generated by a system, i.e., tool developers should aim at reducing unnecessary user interventions. All required efforts of the human have to be taken into account and it has to be in an appropriate proportion to the result. Thus, beside the quality of the alignment, other measures like the number of interactions are interesting and meaningful to decide which matching system is best suitable for a certain matching task. By now, all OAEI tracks focus on fully automatic matching and semi-automatic matching is not evaluated although such systems already exist, e.g., overview in (Ivanova et al., 2015). As long as the evaluation of such systems is not driven forward, it is hardly possible to systematically compare the quality of interactive matching approaches.

In this forth edition of the Interactive track we use three OAEI datasets, namely Conference, Anatomy and Large Biomedical Ontologies (Large Bio) dataset. The Conference dataset covers 16 ontologies describing the domain of conference organization. We only use the test cases for which an alignment is publicly available (altogether 21 alignments/tasks). The Anatomy dataset includes two ontologies (1 task), the Adult Mouse Anatomy (AMA) ontology and a part of the National Cancer Institute Thesaurus (NCI) describing the human anatomy. Finally, the Large Bio consists of 6 tasks with different sizes ranging from tens to hundreds of thousands classes and aims at finding alignments between the Foundational Model of Anatomy (FMA), SNOMED CT, and the National Cancer Institute Thesaurus (NCI).

The quality of the generated alignments in Conference, Anatomy and Large Bio tracks has been constantly increasing but in most cases only by a small amount (by a few percent). For example, in the Conference track in 2013, the best system according to F-measure (YAM++) achieved a value of 70% (Cuenca Grau et al., 2013). On the other hand, while the best result according to F-measure for the Anatomy track was achieved last year by AML with 94% there has been very little improvement over previous few campaigns (only few percent). This shows that there is room for improvement, which could be filled by interactive means.

The interactive matching track was organized at OAEI 2016 for the fourth time. The goal of this evaluation is to simulate interactive matching (see (Dragisic et al., 2016) and (Paulheim et al., 2013)), where a human expert is involved to validate mappings found by the matching system. In the evaluation, we look at how interacting with the user improves the matching results. The SEALS client was modified to allow interactive matchers to ask an oracle. The interactive matcher can present a correspondence to the oracle, which then tells the system whether the correspondence is right or wrong. This year we have extended this functionality - a matcher can present simultaneously several correspondences to the oracle. Similarly to the last year, this year, in addition to emulating the perfect user, we also consider domain experts with variable error rates which reflects a more realistic scenario where a (simulated) user does not necessarily provide a correct answer. We experiment with three different error rates, 0.1, 0.2 and 0.3. The errors were randomly introduced into the reference alignment with given rates.

The evaluations of the Conference and Anatomy datasets were run on a server with 3.46 GHz (6 cores) and 8GB RAM allocated to the matching systems. Each system was run ten times and the final result of a system for each error rate represents the average of these runs. This is the same configuration which was used in the non-interactive version of the Anatomy track and runtimes in the interactive version of this track are therefore comparable. For the Conference dataset with the ra1 alignment, we considered macro-average of precision and recall of different ontology pairs, while the number of interactions represent the total number of interactions in all tasks. Finally, the ten runs are averaged. The Large Bio dataset evaluation (each system was run one time) was run on a Ubuntu Laptop with an Intel Core i7-4600U CPU @ 2.10GHz x 4 and allocating 15Gb of RAM.

Overall, four systems participated in the Interactive matching track: Alin, AML, LogMap and XMap. The systems AML and LogMap have been further developed compared to the last year, XMap participated in this track for the first time. This is also the first participation in the track (and in the OAEI) for Alin. All systems participating in the Interactive track support both interactive and non-interactive matching. This allows us to analyze how much benefit the interaction brings for the individual system.

The different systems involve the user at different points of the execution and employ the user input in different ways. Therefore, we describe how the interaction is done by each system. AML starts interacting with the user during the Selection and Repairing phases (for the Large Bio task only non-interactive repair is employed) at the end of the matching process. The user input is employed to filter the mappings included in the final alignment and AML does not generate new mappings or adjust matching parameters based on it. AML avoids asking the same question more than once by keeping track of already asked questions and uses a query limit and other strategies to stop asking the user and revert to the non-interactive mode. Alin generates an initial set of candidate mappings between classes based on 6 string similarity metrics and a stable marriage algorithm. It uses a stable marriage algorithm with Incomplete Preference Lists which means that neither the sizes of the two sets nor the preference lists need to be with the same lengths. The stable marriage algorithm is run for each similarity metric with a preference list of size one. A pair of classes is considered a candidate mapping if its preference list only contains the other class in the pair for one similarity metric. If a candidate mapping has the maximum similarity value, i.e., 1, for all six metrics than it is directly added to the final alignment. The rest are sorted by the sum of the metrics and are presented one by one to the user. If a full entity name in a candidate mapping is the same as another entity name in another candidate mapping they are shown to the expert simultaneously (up to three at the same time). Alin does not ask the same question more than once. When the user accepts a candidate mapping, it is moved to the final alignment. The data and object correspondences related to the accepted mapping are then added to the candidate mappings list and all candidate mappings that are part of alignment anti-patterns with the approved mapping are removed from the list with the candidates. The process continues until there are no more candidate mappings left. LogMap generates candidate mappings first and then employs different techniques (lexical, structural and reasoning-based) to discard some of them during the Assessment phase. During this phase in the interactive mode it interacts with the user and presents to him/her these mappings which are not clear-cut cases. XMap uses various similarity measures to generate candidate mappings. It applies two thresholds to filter the candidate mappings - one for the mappings that are directly added to the final alignment and another for those that are presented to the user for validation. The latter threshold is selected to be high in order to minimize the number of requests and the rejected candidate mappings from the oracle; the requests are mainly about incorrect mappings. The mappings accepted by the user are moved to the final alignment.

| Measure | Description | Meaning for the evaluation |

|---|---|---|

| Precision, Recall, F-measure | Measure the tools performance against the fixed reference alignment. | Show in absolute terms how the tools performed in the matching problem. |

| Precision Oracle, Recall Oracle and F-measure Oracle | Measure the tools performance against the reference as modified by the oracle's errors. | Show how the tool is impacted by the errors. |

| Precision and Negative Precision | Measure the performance of the oracle itself. | Show what type of errors the oracle made, and thus explain the performance of the tool when faced with these errors. |

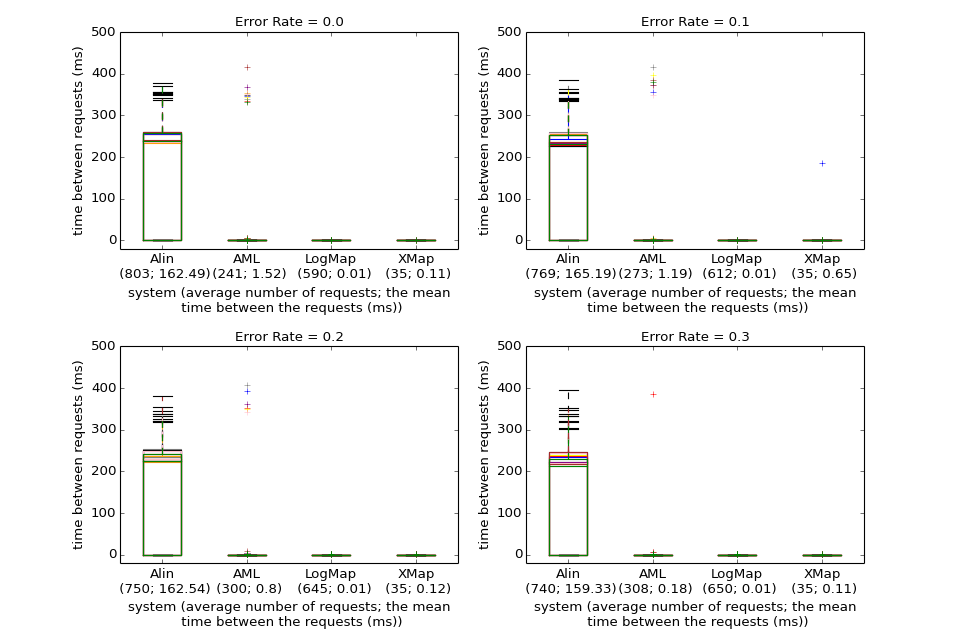

The four tables below present the results for the Anatomy dataset with four different error rates. The first two columns in each of the tables present the run times (in seconds) and the size of the alignment. The next eight columns present the precision, recall, F-measure and recall++ obtained from the interactive and non-interactive tracks for the Anatomy dataset. The measure recall+ indicates the amount of detected non-trivial equivalence correspondences. To calculate it the trivial correspondences (those with the same normalized label including those in the oboInOwlnamespace as well) have been removed as well as correspondences expressing relations different from equivalence. The meaning of the other columns is described above at the beginning of the Results section. Fig. 1 shows the time intervals between the questions to the user/oracle for the different systems and error rates for the ten runs (the runs are depicted with different colors).

We first compare the performance of the four systems with an all-knowing oracle (0.0 error rate), in terms of precision, recall and F-measure, to the results obtained in the non-interactive Anatomy track ("Precision", "Recall", "F-measure", "Recall+", "Precision Non Inter", "Recall Non Inter", "F-measure Non Inter" and "Recall+ Non Inter"). The effect of introducing interactions with the oracle/user is mostly pronounced for the Alin system and especially for its recall measure - Alin's recall increases more than 2 times. On the opposite side is XMap - it benefits the least from the interaction with the oracle. All XMap's measures differ with less than 0.2% from the non-interactive runs. At the same time AML's recall increases with less than 2% and LogMap's recall does not change. User interactions affect differently the precision measure - it increases for all systems and it is most advantageous for LogMap which precision increases with almost 8%. Consequently, the Alin's F-measure increases the most (from 0.5 to 0.854), the F-measure for LogMap and AML slightly changes. AML has the highest F-measure for this error rate as well as for the rest of the tested error rates. It is worth noting that Alin detects only trivial correspondences in the non-interactive track ("Recall+ Non Inter" = 0), introducing user interactions led to detecting some non-trivial correspondences ("Recall+" = 0.35). Thus it seems that Alin is relying only on user interaction to generate non-trivial mappings. The recall+ slightly improves for AML and LogMap and with around 1% for XMap. AML generates the largest alignment, XMap uses the least number of requests to the oracle while Alin generates the smallest alignment with 3 times more user interactions and about 5 times more distinct mappings then AML.

With the introduction of an erroneous oracle/user and moving towards higher error rates the systems' performance, obviously, starts to slightly deteriorate in comparison to the all-knowing oracle. Very noticeably XMap performance does not change at all with the increasing error rates. For the other three we can observe that the changes in the error rates influence the systems differently in comparison to the non-interactive results. While the AML performance with an all-knowing oracle is better on all measures with respect to the non-interactive results, the F-measure drops in the 0.2 and 0.3 cases, while the recall stays higher than the non-interactive results for all error rates. LogMap behaves similarly - the F-measure in the 0.3 case drops below the non-interactive results and it is the same for the 0.2 case, while the precision stays higher in all error rates. Alin's F-measure and recall are higher for all error rates in comparison to its non-interactive results, but its precision drops already in the 0.1 case. Overall the F-measure drops with about 15% for Alin between 0.0 and 0.3 cases and with 5% and 3% respectively for LogMap and AML. Now let us examine how the precision and recall are affected when moving from 0.0 to 0.3 error rates in connection to the values in the last two columns - "Precision" and "Negative Precision". The "Precision" value drops more than the value for "Negative Precision" for both AML and LogMap, with a very noticeable difference for AML. Taking into account that the "Precision" impacts precision and "Negative Precision" impacts recall we would expect that the precision drops more than recall for both AML and LogMap. This is actually observed - AML's precision and recall drop with respectively 5% and 1% and LogMap's - with 5% and 4%. Alin is the opposite case - its "Negative Precision" drops more than "Precision" towards the 0.3 case and we observe that its recall drops more than precision - 17% and 13% respectively. The "Precision" value drops more than the value for "Negative Precision" as well for XMap but we do not observe changes for the precision and recall likely due to the very small number of requests. Another observation is connected to the values in the "Precision Oracle" and "Recall Oracle" columns. AML values are approximately constant, which means that the impact of the errors is linear - even though it does increase the number of queries with the error rate. The impact of the errors is also linear for XMap, the corresponding measures drop even less than AML's. Alin has exactly the same precision according to the oracle; its recall drops slowly but consistently. In this case though, the drop in the recall is undoubtedly due to the fact that it decreases the number of queries with the error rate and thus captures less mappings in total. It should be safe to consider that the impact of the errors is also linear in the case of this tool. LogMap, as the last year, has a noticeable drop in both "Precision Oracle" and "Recall Oracle" which indicates a supralinear impact of errors.

While the size of the alignments produced by LogMap and AML slightly increases with the increasing error rates, the size of the Alin's alignment decreases. The same trend is observed for the number of total and distinct mappings requests that Alin makes to the user - when the error rate increases the number of questions to the user decreases. The number of total and distinct mappings requests for LogMap and AML increases with the error rate and the two values are equal for each tool across the different error rates (except one for AML), i.e., one user interaction contains one mapping and they do not ask the user the same question twice. Alin on the other side combines several mappings in one request to the user. The size of the alignment generated by XMap changes with less than a mapping on average, thus the tool is impacted by errors but on a very small scale. The number of the requests to the oracle does not change for XMap across the error rates. Another difference is observed in the ratio of correct to incorrect requests made to the oracle ("True Positives" and "True Negatives"). In the case of an all-knowing oracle Alin makes slightly more correct than incorrect requests (1.05); the opposite is true for LogMap (0.95). AML on another side makes a lot more incorrect requests than correct (0.27) and this number drops further to 0.23 in the 0.3 error rate. This ratio drops to 0.79 for LogMap as well while it increases for Alin to 1.38. XMap makes even more incorrect requests than AML and its ratio is between 0.14 to 0.17 across the error rates.

For an interactive system the time intervals at which the user is involved in an interaction are important. Fig. 1 presents a comparison between the systems regarding the time periods at which the system presents a question to the user. Across the ten runs and different error rates the AML, LogMap and XMap mean requests intervals are around 1 and 0 ms respectively. Alin's mean intervals are around 160 ms for all error rates. While there are no significant deviations of this values for both Alin and LogMap, for AML several outliers are observed, i.e., the interval between few of the AML requests took up to 400 ms. The run times between the different error rates slightly decrease for Alin while there is no significant change for LogMap, AML and XMap. AML, LogMap and XMap generate an alignment for less than a minute with LogMap performing twice as faster as AML.

The take away of this analyses is that all systems perform better with an all-knowing oracle than in the non-interactive Anatomy track. XMap benefits the least from the interaction with the oracle. Alin performs better for all error rates in the interactive evaluation than in the non-interactive one. It seems that Alin is relying only on user interaction to generate non-trivial mappings. For AML all three measures (precision, recall and F-measure) are higher in the Interactive track with 0.0 and 0.1 error rates than in the non-interactive, for LogMap the recall in the 0.0 case is the same as its non-interactive recall and drops under it in the 0.1 error rate. The growth of the error rate impacts different measures in the different systems but noticeably the XMap performance is barely affected by user errors. The impact of the oracle errors is linear for Alin, AML and XMap and supralinear for LogMap. The ratio of correct to incorrect questions to the oracle is different in the four systems and further changes with the increasing error rates which could be an indicator of different strategies to handle user errors.

In 2015 four systems participated in the Interactive track. Unlike 2015, this year all intervals between the requests to the oracle are less than 500 ms even considering the outliers. Two of the systems that participated the last year participated this year as well - AML and LogMap; they have participated in the track since it has been established in 2013. This year they show very similar results to the results from 2015 in terms of F-measure, run times, request intervals and alignment size. This year the AML's F-measure changed with increasing error rates from 0.958 to 0.932, in 2015 - 0.962 to 0.933. The respective numbers for LogMap are 0.909 to 0.871 in 2016 and 0.909 to 0.873 for 2015. Same as the last year the growth of the error rate impacts different measures in the different systems. Similarly to the last year the impact of oracle's errors is linear for AML and supralinear for LogMap.

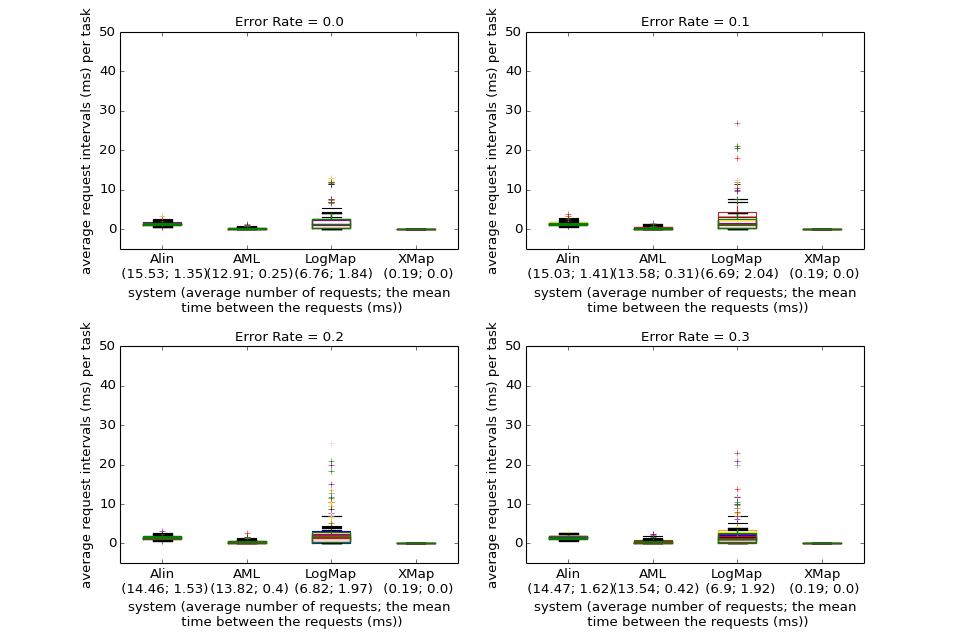

The four tables below present the results for the Conference dataset with four different error rates. The columns are described above at the beginning of the Results section. Fig. 2 shows the average requests intervals per task (21 tasks in total per run) between the questions to the user/oracle for the different systems and error rates for all tasks and the ten runs (the runs are depicted with different colors). The first number under the system name is the average number of requests and the second number is the average period of the average requests intervals for all tasks and runs.

When systems are evaluated using an all-knowing oracle, AML, Alin and LogMap present a higher F-measure than the one they obtained non-interactively. Alin shows the greatest improvement, more than doubling its F-measure, and also the highest F-measure in absolute terms when questioning the oracle. AML and LogMap improved by 8 and 4% respectively. The substantial improvement of Alin is mostly supported by gains in recall, whereas AML and LogMap show more balanced improvements. XMap results are exactly the same as for the non-interactive run which is not very surprising given that it performs only four requests to the oracle for all 21 tasks and they are all for true negatives.

When an error rate is introduced, Alin is the system that is more severely affected, loosing 10, 8 and 7% in F-measure with each increase in error rate. On the other hand, LogMap is resilient, with losses between 2 and 1%. Similarly to the performance shown for the Anatomy dataset XMap is the most resilient and it is not impacted by the oracle erros. In all tests with an error-producing oracle AML produced the highest F-measure. When comparing the systems' performance across the different error rates with their non-interactive results we note that the precision drops earlier than the recall. Alin's recall is higher than its non-interactive results even in the 0.3 (its very low recall in the non-interactive track also contributes to this result) but the precision drops already for the smallest error rate. AML's precision drops under the non-interactive results in the 0.2 case and the recall - in the 0.3. LogMap's precision drops in the 0.3 case while its recall stays almost the same as the non-interactive results. The larger drop in "Precision" than "Negative Precision" values between the 0.0 and 0.3 error rates explains the larger decrease in precision (40%, 21% and 10%) than the decrease in recall (23%, 8% and 4%) for all three systems (Alin, AML and LogMap). The impact of oracle errors ("Precision Oracle" and "Recall Oracle" columns) on the systems' behaviour for the Conference dataset is similar to the impact of the errors on their behaviour for the Anatomy dataset - it is linear for Alin and AML and supralinear for LogMap. It is also linear for XMap, we observe almost unnoticable change for its "Recall Oracle" measure.

The number of total requests posed by each system is different. LogMap poses the lower number (around 140 for every test), AML poses around 280, whereas Alin poses around 310 total requests. As mentioned earlier XMap does make only 4 request for all 21 tasks. The novel feature of analysing multiple mappings at once was apparently used by just one system, Alin, which is querying one or more individual mappings for each interaction. Analysing the values for the "True Positives" and "True Negatives" we observe that the systems mainly ask the oracle about incorrect mappings (true negatives) - in the 0.0 case the ratio is 0.34, 0.21 and 0.53 for Alin, AML and LogMap respectively. This ratio stays the same in the 0.3 case for Alin, slightly drops for LogMap to 0.49 but increases for AML to 0.33, i.e., with increasing the error rates AML starts to ask more positive questions. XMap asks mainly about true negatives but with the increasing error rates starts asking about false positives. Time between requests is low for all systems (2ms or less), with LogMap showing an increase in variance for tasks where errors are introduced. The total run times does not change between the error rates.

The take away of this analysis is that all systems, except XMap, perform better on all measures with an all-knowing oracle than in the non-interactive Conference track. In the 0.1 error rate AML and LogMap still perform better on all measures, while Alin's precision drops below its non-interactive precision. Its F-measure however stays higher than the non-interactive value even in the 0.3 case due to the huge improvement in the recall. XMap measures do not change across the error rates and are the same as for the non-interactive track.

Two of the systems also competed the last year, AML and LogMap. AML achieved slighlty worse results, with decreases in F-measure around 2-3% which were not due to a decrease in the non-interactive performance. LogMap, on the other hand, registered a slight improvement (around 1 %). AML has increased the number of requests it poses (around 50% more), whereas LogMap has essentially maintained the same number of requests.

While LogMap, XMap and AML make use of user interactions exclusively in the post-matching steps to filter their candidate mappings, Alin can also add new candidate mappings to its initial set. LogMap and AML both request feedback on only selected mapping candidates (based on their similarity patterns or their involvement in unsatisfiabilities) and only present one mapping at a time to the user. XMap also presents one mapping at a time and asks mainly for true negatives. Only Alin employs the new feature in this year's evaluation - analysing several mappings simultaneously - and can present up to three mappings together to the user if a full entity name in a candidate mapping is the same as another entity name in another candidate mapping.

With the all-knowing oracle all systems, except XMap, perform better than their non-interactive results for both datasets. Very noticeably XMap's performance does not change across the error rates and it also barely benefits from the interaction with the oracle (and only for the Anatomy dataset). Although systems' performance deteriorates when moving towards larger error rates there are still benefits from the user interaction - some of the systems' measures stay above their non-interactive values even for the larger error rates. For the Anatomy dataset different measures are affected in the different systems in comparison to their non-interactive results; for the Conference dataset the precision drops under the non-interactive values faster than the recall. The drop in precision and recall for all systems, except XMap, is larger for the Conference dataset than for the Anatomy dataset with the increasing error rates.

The impact of the oracle's errors is linear for Alin, AML and XMap and supralinear for LogMap for both datasets. One difference between the two datasets is the ratio of correct to incorrect requests to the oracle. There is a clear trend for all three systems (except XMap) in the Conference dataset, the ratio stays around and under 0.5 for all error rates. In the Anatomy dataset the ratio changes differently for each system.

Two models for system response times are frequently used in the literature (Dabrowski et al., 2011): Shneiderman and Seow take different approaches to categorize the response times. Shneiderman takes task-centred view and sort out the response times in four categories according to task complexity: typing, mouse movement (50-150 ms), simple frequent tasks (1 s), common tasks (2-4 s) and complex tasks (8-12 s). He suggests that the user is more tolerable to delays with the growing complexity of the task at hand. Unfortunately no clear definition is given for how to define the task complexity. The Seow's model looks at the problem from a user-centred perspective by considering the user expectations towards the execution of a task: instantaneous (100-200 ms), immediate (0.5-1 s), continuous (2-5 s), captive (7-10 s); Ontology alignment is a cognitively demanding task and can fall into the third or forth categories in both models. In this regard the response times (request intervals as we call them above) observed with both the Anatomy and Conference dataset fall into the tolerable and acceptable response times, and even into the first categories, in both models. The request intervals for both AML and LogMap stay around 1 ms for both datasets. Alin's request intervals are around 160 ms for the Anatomy dataset and lower for the Conference dataset. It could be the case however that the user could not take advantage of very low response times because the task complexity may result in higher user response time (analogically it measures the time the user needs to respond to the system after the system is ready).

Zlatan Dragisic, Valentina Ivanova, Patrick Lambrix, Daniel Faria, Ernesto Jimenez-Ruiz and Catia Pesquita. "User validation in ontology alignment". ISWC 2016. [paper] [technical report]

Bernardo Cuenca Grau , Zlatan Dragisic , Kai Eckert & et al. "Results of the Ontology Alignment Evaluation Initiative 2013" OM 2013. [pdf]

Heiko Paulheim, Sven Hertling, Dominique Ritze. "Towards Evaluating Interactive Ontology Matching Tools". ESWC 2013. [pdf]

Ernesto Jimenez-Ruiz, Bernardo Cuenca Grau, Yujiao Zhou, Ian Horrocks. "Large-scale Interactive Ontology Matching: Algorithms and Implementation". ECAI 2012. [pdf]

Valentina Ivanova, Patrick Lambrix, Johan Åberg. "Requirements for and evaluation of user support for large-scale ontology alignment". ESWC 2015. [publisher page]

Pavel Shvaiko, Jérôme Euzenat. "Ontology matching: state of the art and future challenges". Knowledge and Data Engineering 2013. [publisher page]

Jim Dabrowski, Ethan V. Munson. "40 years of searching for the best computer system response time". Interacting with Computers 2011. [publisher page]

This track is currently organized by Zlatan Dragisic, Daniel Faria, Valentina Ivanova, Ernesto Jimenez Ruiz, Patrick Lambrix, Huanyu Li and Catia Pesquita. If you have any problems working with the ontologies or any suggestions related to this track, feel free to write an email to ernesto [at] cs [.] ox [.] ac [.] uk or ernesto [.] jimenez [.] ruiz [at] gmail [.] com

We thank Dominique Ritze and Heiko Paulheim, the organisers of the 2013 and 2014 editions of this track, who were very helpful in the setting up of the 2015 edition.

The track is partially supported by the Optique project.