The synthesis paper describing the final results can be found here

As in 2012, we distinguish between matching systems that participated in the ontology matching tracks and matching systems that participated in the instance matching tracks. There were 7 ontology matching tracks (benchmark, anatomy, conference, multifarm, interactive, largebio and phenotype) and 2 instance matching tracks (pm and im). This year all tracks ran under SEALS.

Some systems had problems generating results for some SEALS tracks, but this can also be counted as a result. Links to the pages describing the results for each track can be found at the bottom of the page.

OAEI 2016 had 30 registered systems, although in the end only 21 were submitted (this is in line with OAEI 2015, which had 22 participants).

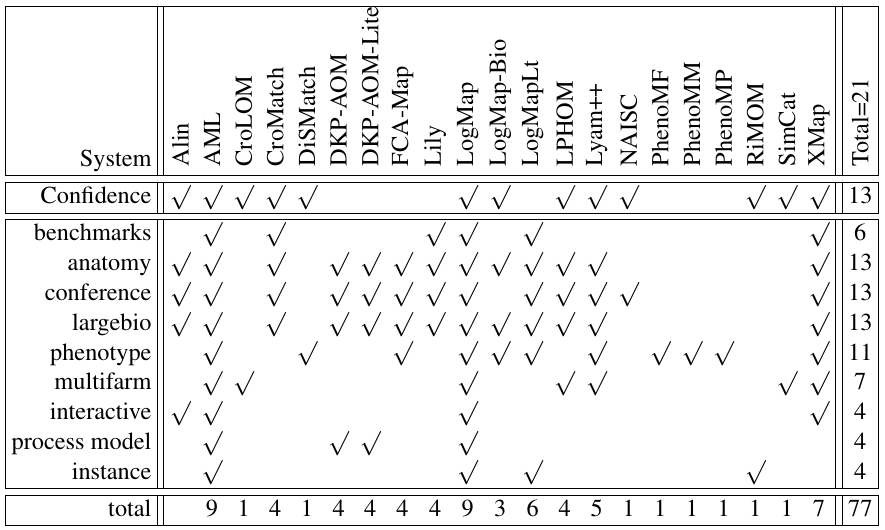

Eleven of the systems participating in OAEI 2016 had participated in previous campaigns (AML, CroMatch, DKP-AOM, DKP-AOM-Lite, Lily, LogMap, LogMapLt, LogMap-Bio, LYAM++, RiMOM, XMap), while ten were new participants (Alin, CroLOM, DisMatch, FCA-Map, LPHOM, NAISC, PhenoMF, PhenoMM, PhenoMP, SimCat). Table 1 shows their participation across the different OAEI tracks.

This year all systems were able to run under the SEALS platform. DisMatch, however, was evaluated off-line by its authors since it required a large amount of resources to run.

The OAEI 2016 results are available track by track:

The synthesis paper is available here (please cite it from the Ontology Matching workshop proceedings).

For further questions, contact the organizers of the specific track directly. Contact information can be found at the bottom of each linked results page.